Securing the MCP Stack: Patterns and Pitfalls

Understanding MCP Architecture: Secure Foundations for Agentic AI Workflows

A deep dive into MCP architecture, security risks, and how to design safe, scalable agentic workflows for modern AI systems.

Introduction to The MCP Stack

As the landscape of AI-driven automation evolves, the Model Context Protocol (MCP) stands out as a transformative standard. It redefines how language models interact with the world, bridging AI systems with tools, APIs, and real-time data sources in a way that is both structured and scalable.

Think of MCP as the USB-C of AI. A universal port that allows large language models (LLMs) to plug into enterprise infrastructure, developer tools, and knowledge bases seamlessly. As enterprises rush to embed AI into core workflows, MCP is quickly becoming the backbone of secure, interoperable, agentic systems. But behind this powerful orchestration layer lies a complex architecture which brings with it, new attack surfaces and operational risks.

In this blog, we’ll unpack how the MCP stack works, where it shines, and what security-first design looks like in this new AI-native paradigm.

Why MCP Was Needed

Before MCP, every AI system that needed to interact with external tools relied on a fragile, bespoke integration pipeline. Each new data source required a handcrafted connector. Tool usage was inconsistent, and security was often an afterthought.

Enter: the MCP

It solves the above-mentioned issues by offering:

- Standardised tool access (via function-callable APIs)

- Real-time data exchange with structured messaging

- LLM-native design for agent workflows

- Plug-and-play integration for rapid deployment

From automating DevOps tasks to fetching patient data in healthcare, MCP enables LLMs to act with autonomy and precision. Not just think.

Key Features

- Standardised Tool Access: Replaces bespoke APIs with AI-ready, function-callable tools.

- Real-Time Data Flow: Ensures models operate on fresh, relevant information.

- Seamless Integration: Plug-and-play architecture accelerates deployment and minimizes engineering overhead.

- LLM-Native Design: MCP tools are designed with LLMs in mind, making them ideal for agent workflows.

Real-World Applications

From automating pull requests on GitHub to fetching real-time patient data in healthcare, MCP enables AI to operate more autonomously and effectively:

- Enterprise integration (e.g., CRM, cloud storage)

- Developer tools augmentation

- AI-powered support and diagnostics

With its promise of standardisation and seamless integration, MCP sets the stage for a new paradigm in AI-enabled systems. However, to understand how it delivers on this promise (and where potential vulnerabilities lie), we need to take a closer look under the hood.

Let’s break down the architecture that powers MCP and examine how its components work together to enable secure, scalable AI workflows.

Components of MCP

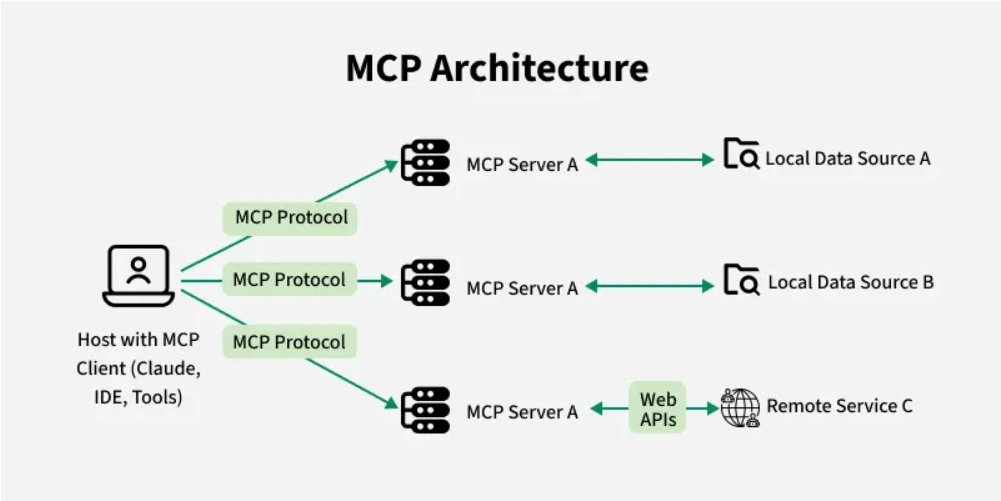

At the heart of the Model Context Protocol (MCP) lies a flexible, modular client-server architecture designed for structured, secure communication between AI systems and external data or tools.

This architecture comprises five key layers: Host, Client, Server, Protocol, and Transport, each playing a distinct role in enabling real-time, LLM-native interoperability.

1. Host: The User-Facing Entry Point

The Host is the AI-powered application that users directly interact with—whether it’s a chat interface, developer tool, or intelligent agent.

- Examples: Claude Desktop, ChatGPT, IDEs like Cursor, or LangChain-powered agents.

- Responsibilities:

- Capturing user input and routing it through an LLM.

- Managing permissions and orchestrating external tool access via MCP Clients.

- Presenting results coherently to the user.

- Capturing user input and routing it through an LLM.

2. Client: The Communication Bridge

Embedded within the Host, the Client manages a 1:1 connection with a specific MCP Server.

- It abstracts the protocol details, handles message formatting, and ensures structured interaction.

- Crucial for discovering and invoking the capabilities offered by Servers.

- Without Clients, the Host cannot access external systems or perform tool-based tasks.

3. Server: The Capability Gateway

MCP Servers expose the actual tools and data that AI models act upon.

- They act as lightweight wrappers around APIs, databases, or services.

- Deployment modes:

- Local (e.g., GitHub MCP server running on the user’s machine).

- Remote (e.g., accessing Google Drive or Slack over the internet).

- Local (e.g., GitHub MCP server running on the user’s machine).

- Servers describe their capabilities in a standardized schema, making them easy for Clients to query and invoke.

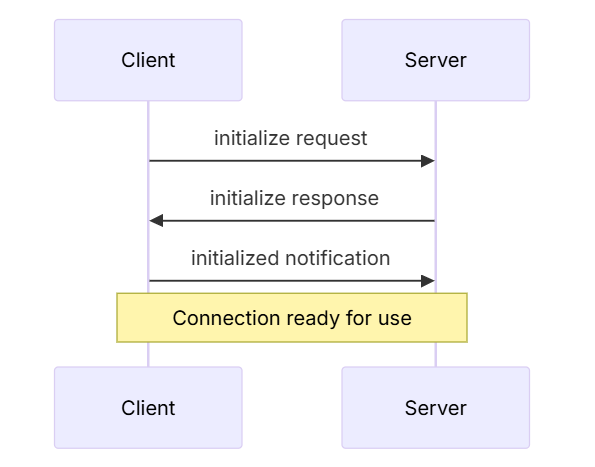

4. Protocol Layer: Structuring the Conversation

The Protocol layer defines how Clients and Servers exchange structured information.

- Responsible for message framing, linking requests with responses, and enabling advanced interaction patterns.

- Implements support for:

- Requests (expecting a response),

- Results, Errors, and

- Notifications (fire-and-forget messages).

- Requests (expecting a response),

- Built on top of JSON-RPC 2.0 for widespread compatibility and extensibility.

5. Transport Layer: Moving the Messages

This layer defines how messages are transmitted between Clients and Servers.

- Stdio Transport: Efficient local communication via standard input/output; ideal for CLI tools and desktop applications.

- HTTP Transport: Supports remote execution with streaming capabilities (e.g., via Server-Sent Events), making it best suited for network-based interactions.

- All transports ensure secure, reliable communication channels, forming the backbone of agent-to-tool interoperability.

How These Components Work Together

Here’s the typical flow in an MCP-enabled interaction:

- User issues a query via the Host.

- Host uses an LLM to determine required external tools.

- Client connects to the appropriate Server.

- Client discovers available capabilities.

- LLM or Host selects and invokes a tool.

- Server executes the request and returns the result.

- Host integrates the result back into the LLM context or UI.

This modular, decoupled architecture transforms the traditional integration landscape. Instead of every AI tool building custom connectors for each service (M×N complexity), MCP simplifies the process to a simple M+N problem, requiring each Host and Server to communicate with the MCP only once.

SWOT Overview of MCP Components

Now, as MCP adoption grows, so does the need to understand its architectural fault lines. Each layer of the stack (Host, Client, Server, Protocol, and Transport) comes with its own strengths and liabilities. A clear SWOT (Strengths, Weaknesses, Opportunities, Threats) analysis of these components reveals not only their technical boundaries but also where security design must be most vigilant.

Patterns & Pitfalls to Note

Securing the MCP stack is not just about hardening individual components. It’s about recognizing how flexibility, extensibility, and modularity can become double-edged swords.

Here are some of the most critical recurring patterns across the stack:

Flexibility ≠ Safety by Default

MCP’s modular design enables rapid prototyping and innovation in the form of plug-and-play servers, flexible hosts, and diverse transports. But this same flexibility can lead to inconsistencies in permission handling, schema enforcement, or authorization coverage.

The result? Secure-by-default becomes opt-in instead of guaranteed.

This is especially important given that authorization in MCP is optional at the protocol level but strongly recommended for HTTP-based transports.

Implementations that ignore this create blind spots. Clients might access protected resources without proper audience-bound tokens, or servers may misinterpret or mishandle access control due to lax validation.

Middleware Is a Fragile Middle Ground

Clients are meant to abstract away protocol details. However, in practice, they often become the weakest link. Version mismatches, outdated discovery mechanisms, or partial OAuth support can lead to misbehavior. This is especially dangerous in contexts where the MCP client is responsible for initiating the authorization flow and parsing WWW-Authenticate headers, yet lacks robust fallback or error-handling mechanisms.

This reinforces the need for:

- Strict version pinning

- Comprehensive protocol test coverage

- Consistent token validation logic

Extensibility Expands the Attack Surface

The Host and Server layers are designed to be extensible: tooling, behaviors, and even protocol adaptations can vary across deployments. But with extensibility comes risk:

- Malformed payloads can exploit lenient schema parsers.

- Rogue tools can escalate privileges.

- Race conditions in capability discovery can bypass expected checks.

Authorization helps contain some of this risk, but only if tokens are scoped with resource indicators (RFC 8707) and if servers strictly validate audience claims. Without this, token reuse across tools or services becomes a serious threat.

Standardization vs Innovation

The protocol and transport layers need to be stable enough to ensure interoperability, but not so rigid that they block evolution. Fragmentation here leads to incompatible stacks, orphaned tools, and ultimately a fractured security posture.

This is where the recently included OAuth 2.1 standards and discovery mechanisms like RFC 8414 and OpenID Connect become vital. They form the backbone of consistent, secure interoperability.

What This Means for MCP Security Design

The MCP stack is robust and forward-looking. But it demands discipline. And “Security” isn’t just a layer. It’s a mindset, embedded in every interface, handshake, and decision the stack makes.

Here’s a checklist built on real-world implementation lessons and the updated MCP:

✅ Secure Every Layer

- Hosts must sandbox tool execution and enforce per-invocation permission boundaries.

- Clients must validate schemas, handle failures gracefully, and never retry blindly.

- Servers must implement OAuth-based authorization, even if clients are permissive.

- The protocol should emit structured errors to avoid leaking internal state.

- Transport must default to TLS or equivalent encryption at all times.

✅ Treat Prompt Injection as a First-Class Threat

- Sanitize prompts and tool outputs at the LLM level using tooling like HiddenLayer’s AIDR.

- Red-team entire agent workflows—not just user input.

✅ Dynamic Permissioning, Not Static Grants

- Tools must reassert intent for sensitive actions like file writes or API calls.

- Avoid “allow all” permissions; design UIs that encourage deliberate approvals.

- Leverage OAuth token scopes and audience binding to minimize token overreach.

✅ Use Protocol Semantics Defensively

- Use prompt-engineered tool wrappers to enforce logging, soft-fail modes, or deny-lists.

- Deploy self-monitoring logic in agent loops to flag and halt anomalous behavior.

✅ Enforce Unique, Namespaced Tool Identity

- Adopt structured IDs (e.g., com.example.toolname) and display full provenance (tool + server origin) in UI layers.

- This is critical for token-based validation and authorization scoping.

✅ Watch for Mutation Attacks

- Enforce version pinning, manifest signing, and token hash checks in clients.

- Warn users or abort when unexpected tool updates are detected.

✅ Defend Against Multi-Tool Chaining

- Scope and sandbox each tool invocation independently.

- Audit the entire dataflow across a chain, not just isolated calls.

- Ensure that access tokens are never passed between tools—each token must be scoped to a single audience and issued by a trusted authorization server.

✅ Build Secure Defaults

- Enable authorization by default, especially over HTTP.

- Tool registries should require signed metadata and verified registration flows.

- Clients must fail closed—never silently switch to a fallback or insecure tool path.

Looking Ahead: MCP and the Future of Agentic Workflows

The recent adoption of MCP in Copilot Studio by Microsoft marks a shift toward knowledgeable, context-aware agents. MCP doesn’t just streamline integration. It enables agents to reason over live data, dynamically adopt tools, and select the best actions at runtime. This paves the way for more adaptive and autonomous agents, no longer limited to static, hardcoded flows.

At its core, MCP enables agents to understand, invoke, and interact with external tools and knowledge sources in real-time. With support for automatic action ingestion, dynamic tool updates, and secure API integrations, MCP transforms the agent from a static decision tree into a dynamic reasoning entity, capable of selecting the right tool for the job at runtime.

This unlocks powerful new patterns in agent design:

- Composable Capabilities: Agents can now leverage a wide range of MCP-enabled tools, whether internal APIs or external services, without requiring manual configuration or redeployment.

- Live Reasoning over Fresh Data: With real-time data access via MCP, agents can reason over up-to-date business logic and system state, eliminating the staleness that plagues static language models.

- Self-Updating Toolchains: Thanks to dynamic action updates, agents can automatically adopt improved tool versions and retire deprecated ones, thereby reducing the need for human-in-the-loop maintenance.

- Context-Aware Invocation: Each action brought in via MCP is enriched with structured metadata (inputs, outputs, descriptions), enabling the agent to select and chain tools based on contextual fit rather than hardcoded logic.

And this is just the beginning. As this architecture matures, we’re likely to see a shift toward declarative agent design, where developers define goals and constraints, and the agent (powered by MCP) selects the optimal toolchain on the fly. We can expect agentic workflows to become more reliable, interpretable, and secure by design.

Follow us along to learn how we at initializ.ai are participating in this phenomenon!

.png)

.png)